26 January 2026 | Monday | Opinion | By Dr Ben Sidders, Chief Scientific Officer, Biorelate

AI's presence in pharma & animal health is rapidly expanding – the global AI pharmaceutical market is expected to grow to tens of billions of dollars by 2032, and 75% of pharma companies have made AI a strategic priority in 2025. Shareholders, however, are facing up to a sobering reality: the failure rates of AI in pharma remain painfully high. According to MIT, 95% of enterprise AI pilots fail to generate measurable revenue. This raises a vital question: are pharma's AI investments mere 'fool's gold', or can rigorous, evidence-based metrics provide clarity and ensure genuine return on investment?

Interrogating the evidence behind the enthusiasm

Pharma's rapid adoption of AI has been fuelled by promises of revolutionary drug discovery efficiencies and cost reductions. The enthusiasm has led to significant capital inflows, partnerships, and pilots aiming to harness AI's predictive power for molecule identification, clinical trial optimisation, and safety monitoring.

Yet the hype risks outpacing the real impact, sparking concerns over a bubble that could burst with far-reaching consequences. Google's CEO has warned that no sector, including pharma, will be immune if the AI bubble collapses. This warning underscores the need for pharma to assess its AI strategies critically and to replace unchecked optimism with rigorous, evidence-backed ROI metrics that prioritise sustainable innovation over hype-driven investment.

Over the past decade, pharma has assembled a wide range of AI partnerships, proof-of-concept studies, and exploratory pilots. Many have produced genuinely impressive technical feats. Some systems propose molecular scaffolds that would never emerge through traditional medicinal chemistry. Others sift through layers of transcriptomic or proteomic data with sensitivity that conventional statistical methods struggle to match. Certain models even trace patterns across scientific literature that would take human teams months to assemble.

The difficulty arises when teams try to connect these capabilities to the practical decisions that underpin research portfolios. Discovery doesn't advance simply because an idea is interesting. It advances when the evidence behind a target becomes coherent enough to withstand scrutiny from biology, chemistry, safety, translational science, and commercial leads. In early discovery, the influence of AI is harder to quantify because there are so many factors that affect the ultimate decision: to progress a target to clinical trials or not. An isolated AI result, even a sophisticated one, rarely changes the overall picture unless it contributes directly to a question already under consideration. Without that alignment, the output drifts to the edges of decision-making – respected for its ingenuity, but uncertain in its influence. AI often has clearer, more measurable effects in later phases of drug development where the questions are more defined, and this contrast explains some of the uncertainty around its role in target selection and validation.

This challenge is reinforced by how decisions take shape inside research organisations. Findings are collated from disparate disciplines, often at a very high level. Data science teams have not traditionally been a part of this decision-making process. When AI produces a new insight, the result might be solid in its own right, yet difficult to relate to the broader narrative of a target. Teams need a coordinated way to judge how it fits into the assessment of a target.

Measuring real-world AI efficacy beyond the rhetoric

To cut through the noise, pharma must turn to evidence-led ROI metrics that extend beyond surface-level claims. Progress in early discovery tends to emerge through small shifts in confidence rather than dramatic leaps, which is why teams often struggle to judge what an AI output actually changes. A convincing computational result can catch attention, but decisions depend on how well the full body of evidence holds together.

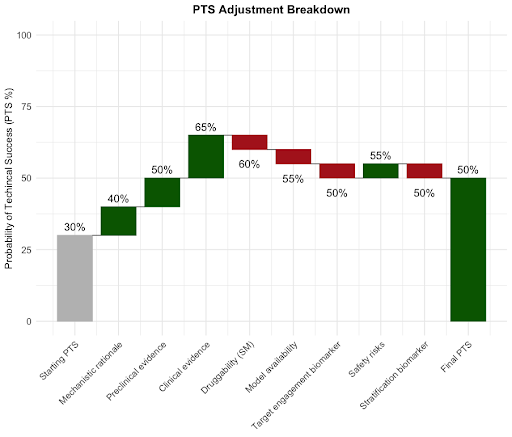

One promising approach is the Probability of Technical and Regulatory Success, or PTRS. This metric forecasts a project's likelihood of success across clinical and regulatory pathways. Although PTRS is traditionally applied to later-stage clinical assets, the concept can be reused (as straight PTS, without the R) to offer a structured approach in earlier phases of drug discovery. This would provide a quantifiable framework for target evaluation and, crucially, enable tracking of AI contributions. Expressed as a percentage (0–100%), PTS as envisioned here would represent the probability that a target will progress through early discovery and be ready to enter clinical validation.

PTS assessment works by evaluating evidence across multiple categories; each pharma company defines which categories of knowledge matter to it and how much weight each carries. For example, a strong mechanistic rationale might add 10 percentage points, robust clinical evidence might add 15, and identified safety risks or class-related adverse events might subtract 10. The knowledge of a target is evaluated against these categories to provide a baseline; typically, this is in the range of 10 to 30% PTS. The impact of any new knowledge derived from AI can then be re-evaluated against the same categories: if the PTS increases, AI quantifiably drives value; if it decreases, it can help a pharma company “de-select” a bad target or programme.
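To make the re-scoring logic concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of any company's actual model: the category names, the additive combination from zero, and the clamping to 0–100 are all hypothetical choices, and the weights simply reuse the illustrative figures above.

```python
# Hypothetical category weights in percentage points, reusing the
# illustrative figures from the text; each company would define its own.
CATEGORY_WEIGHTS = {
    "mechanistic_rationale": 10.0,
    "clinical_evidence": 15.0,
    "safety_risk": -10.0,
}


def pts(evidence):
    """Return an illustrative PTS (0-100) for a set of evidence categories.

    Assumption: weights are simply added together, starting from zero,
    and the result is clamped to the 0-100 range.
    """
    raw = sum(CATEGORY_WEIGHTS[category] for category in evidence)
    return max(0.0, min(100.0, raw))


def pts_change(before, after):
    """Change in PTS, in percentage points, after new evidence arrives."""
    return pts(after) - pts(before)


# Baseline: only clinical evidence is established for this target.
baseline = {"clinical_evidence"}

# Case 1: an AI result supplies a mechanistic rationale.
with_ai_rationale = baseline | {"mechanistic_rationale"}

# Case 2: an AI result surfaces a safety risk instead.
with_ai_safety_flag = baseline | {"safety_risk"}

print(pts(baseline), pts_change(baseline, with_ai_rationale))
print(pts(baseline), pts_change(baseline, with_ai_safety_flag))
```

In this toy setup, the first case raises the score and the second lowers it, which mirrors the two outcomes described above: the AI result either adds measurable confidence or supports de-selecting the target.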

In the example shown in Figure 1, the target has a starting PTS of 30%. An AI tool that leverages causal knowledge from the literature is then able to fill in key evidence for mechanistic rationale, preclinical and clinical evidence, and safety risks. This drives the PTS up to 50%. However, key knowledge gaps remain (shown in red), and these should be prioritised to de-risk the target.

Figure 1. PTS changes guided by AI-assisted evidence assessment

When this logic is adapted for early research, the pieces that shape confidence become more visible: scientists and portfolio leads begin to articulate what they view as reliable evidence and where they see uncertainty; assumptions that would otherwise sit unspoken come to the surface; differences in how teams weigh risk become easier to reconcile. AI becomes simpler to evaluate once this shared framework exists, because its outputs can be compared against criteria the organisation already trusts rather than assessed as isolated technical achievements.

With PTS, the financial implications become tangible, too, by calculating the expected net present value (eNPV). For example, if AI can help define a mechanistic rationale that increases PTS by 10 percentage points for a drug with a peak year sales (PYS) scenario of $275 million, we can infer that the AI result has protected the realisation of that value proportionately (i.e. a $27.5 million gain in eNPV). Now consider that the same approach could be applied to a 10-asset portfolio, all with a starting PTS of 30% and, for the sake of the example, the same $275 million PYS scenario, and was successful in just half of the projects. In this scenario, the average PTS rises from 30% to 35%, a relative improvement of roughly 17%, corresponding to an estimated $137.5 million gain in eNPV across the portfolio (five projects at $27.5 million each). These aren't abstract numbers. They're the kind of figures that finance teams, governance committees, and investors can scrutinise and act upon.
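The arithmetic behind these figures can be checked with a short Python sketch. It follows the simplification used in the example, treating the eNPV gain as the PTS uplift multiplied by peak year sales; a full eNPV model would also discount cash flows and account for development costs. The function and variable names are hypothetical, and the portfolio assumes every asset shares the same $275 million PYS scenario.

```python
PEAK_YEAR_SALES_MUSD = 275.0  # peak year sales scenario, in $ millions
STARTING_PTS = 30.0           # starting PTS for every asset, in percent


def enpv_gain_musd(pts_uplift_pp, peak_year_sales_musd):
    """Simplified eNPV gain in $ millions: PTS uplift x peak year sales.

    Assumption: no discounting and no costs, as in the worked example.
    """
    return pts_uplift_pp * peak_year_sales_musd / 100.0


# Single asset: a 10 percentage point uplift.
single_asset_gain = enpv_gain_musd(10.0, PEAK_YEAR_SALES_MUSD)

# Portfolio of 10 assets: the uplift succeeds in half of them.
uplifts_pp = [10.0] * 5 + [0.0] * 5
portfolio_gain = sum(
    enpv_gain_musd(uplift, PEAK_YEAR_SALES_MUSD) for uplift in uplifts_pp
)

# Average PTS after the uplift, and the relative improvement over 30%.
average_pts = sum(STARTING_PTS + uplift for uplift in uplifts_pp) / len(uplifts_pp)
relative_improvement = (average_pts - STARTING_PTS) / STARTING_PTS

print(f"Single-asset gain: ${single_asset_gain:.1f}M")
print(f"Portfolio gain: ${portfolio_gain:.1f}M")
print(f"Average PTS: {average_pts:.0f}%")
print(f"Relative improvement: {relative_improvement:.1%}")
```

Keeping the calculation this explicit makes the assumptions easy to challenge: changing the sales scenario per asset, or the share of projects where the uplift holds, immediately shows how sensitive the portfolio figure is.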

Put simply – adopting PTS as a universal yardstick could provide pharma with an early, quantifiable measure of AI's potential financial return, helping distinguish genuine value from mere technological novelty.

AI as a scientific decision-making tool, not a magic bullet

AI is often talked about as a shortcut to drug discovery, but the reality is that AI initiatives are often pursued in isolation. When AI is aligned with the priorities and needs of drug discovery, its relevance and impact increase. When it is not, the output stays in the realm of clever theory with little influence on investment or portfolio decisions.

This is why clear performance metrics are so important – giving teams a way to judge not whether an AI result looks impressive, but whether it moves a programme forward in a quantifiable and value-driven way. For executives and investors, this structure is vital. It anchors AI’s contribution to something that can be interrogated with the same discipline applied to any other scientific input. Thus, with the right metrics in place, AI’s role in pharma becomes easier to value, easier to trust, and ultimately easier to fund.

If AI is going to support decisions with real scientific and financial consequences, it has to do so in a language that speaks to scientists and investors alike. Defined in this way, AI finds its proper place in discovery. Not as a magic bullet, but as a tool that sharpens judgement, organises evidence, and makes risk more visible.

Is this technology making discovery more reliable?

As the pharma industry faces the risk of the AI bubble bursting, the need for rigorous, standardised metrics has never been more critical. Pharma’s push into AI is ambitious, necessary, and unlikely to reverse. But ambition alone cannot answer the central question facing investors and executives: is this technology making discovery more reliable, or simply adding complexity without improving decisions? Without a clear way to evaluate AI’s ROI, the industry risks accumulating sophisticated tools without improving its likelihood of clinical and commercial success.

Frameworks like PTS help cut through that uncertainty. They provide teams with a structured, transparent way to evaluate whether AI-derived insights genuinely strengthen the case for progressing a target, or introduce noise that slows progress. This turns small shifts in confidence into something companies can quantify and interrogate, using the same discipline they apply to any other scientific input – a crucial step for leaders deciding where to place capital and which technologies merit scale.

What emerges is a more grounded vision of AI's role in pharma. Not for shortcuts or guaranteed breakthroughs, but rather as a contributor to an evidence lifecycle that remains rigorous, cumulative, and aligned with the realities of scientific risk. AI becomes a tool that helps teams navigate complexity – not amplify it – by turning scattered information into clearer decisions that stand up to scientific and financial scrutiny.

Without robust metrics, AI risks being pharma's fool's gold – impressive on the surface but disappointing when pressure-tested. With the right evaluative structure, it becomes something very different: a measurable asset that strengthens portfolios, accelerates progress, and ultimately benefits patients through more reliable discovery. As scientific challenges grow more complex and competitive pressures rise, this shift from enthusiasm to evidence-driven evaluation will shape not just the value of AI, but the future of R&D itself.

© 2026 Biopharma Boardroom. All Rights Reserved.